Content moderation in the GameFi sector, which merges gaming with decentralized finance, presents a unique set of challenges. As the industry evolves, it faces issues related to user-generated content, regulatory compliance, and the need for moderation strategies that keep the gaming environment safe and enjoyable. The rapid growth of the GameFi market, projected to reach USD 119.6 billion by 2031 at a CAGR of 29.5%, underscores the need for robust content moderation frameworks that can manage the complexities of combining gaming and finance.

| Key Concept | Description/Impact |

|---|---|

| Volume and Scale | The massive amount of user-generated content in GameFi platforms makes it difficult to monitor and moderate effectively, leading to potential exposure to harmful or inappropriate content. |

| Diversity of Content | GameFi encompasses various content types, including text, images, and videos, requiring moderators to be skilled in evaluating diverse formats consistently. |

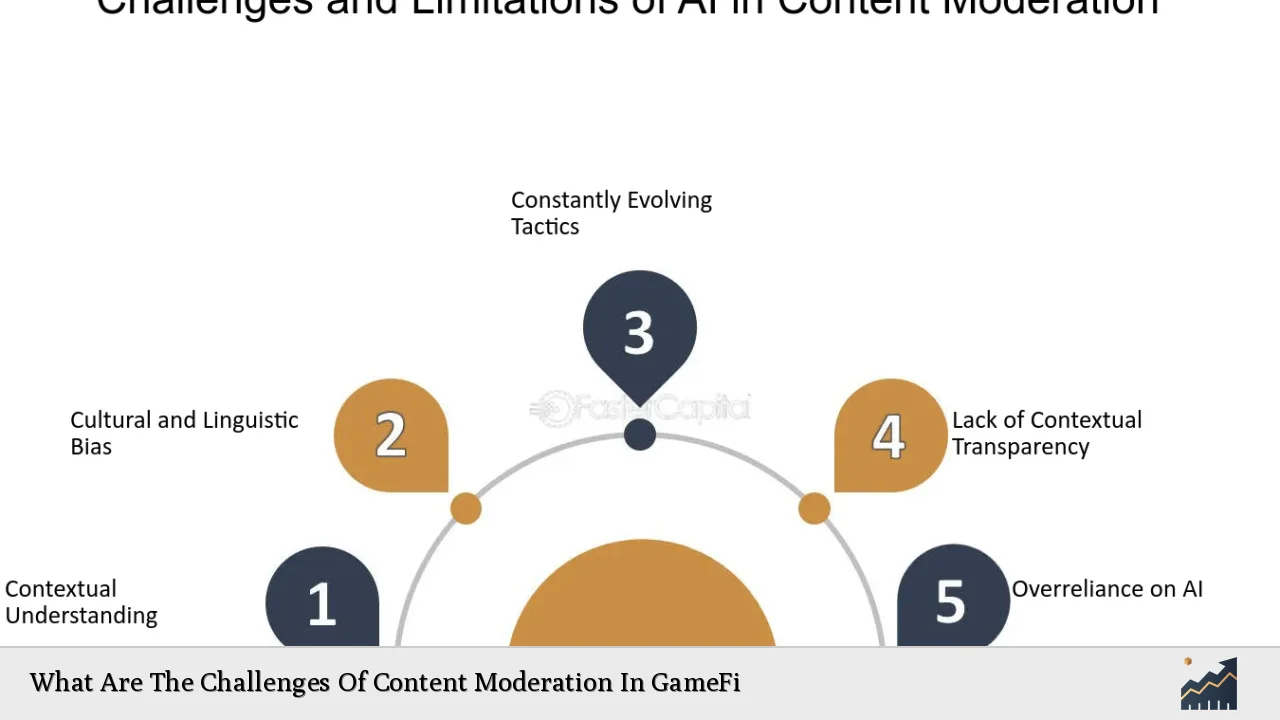

| Contextual Ambiguity | Understanding the context behind user interactions can be challenging; humor or sarcasm may lead to misinterpretations during moderation. |

| Algorithmic Bias | Automated moderation tools may exhibit biases, resulting in unfair treatment of certain users or communities. |

| Cultural Sensitivity | Global platforms must navigate cultural differences in content acceptability, complicating moderation efforts. |

| User Privacy Concerns | Moderation processes can infringe on user privacy, raising ethical concerns about data handling and user rights. |

| Regulatory Compliance | New regulations, such as the Digital Services Act (DSA), impose strict requirements on content moderation practices, increasing operational burdens on platforms. |

| Mental Health of Moderators | The exposure to toxic content can lead to burnout among moderators, necessitating support systems for their mental well-being. |
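One way to check an automated tool for the algorithmic bias described above is to compare its false positive rate (benign content wrongly flagged) across user groups, using a sample that human moderators have already labelled. The sketch below is a minimal illustration under stated assumptions: the grouping attribute (a language code here), the record layout, and the sample data are all hypothetical, and false positive rate parity is only one of several fairness measures a platform might track.

```python
from collections import defaultdict

# Each record is (group, flagged_by_model, confirmed_violation).
# "group" is a hypothetical attribute such as the user's language.
def false_positive_rates(records):
    """Per group, the share of human-confirmed benign items that the model flagged."""
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, flagged, violation in records:
        if not violation:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}


def max_disparity(rates):
    """Gap between the highest and lowest per-group false positive rate."""
    return max(rates.values()) - min(rates.values())


# Illustrative, made-up sample of human-reviewed decisions.
sample = [
    ("en", True, True), ("en", False, False), ("en", False, False), ("en", True, False),
    ("es", True, False), ("es", True, False), ("es", False, False), ("es", True, True),
]

rates = false_positive_rates(sample)
print(rates)
print(f"Largest gap between groups: {max_disparity(rates):.2f}")
```

In this made-up sample, benign Spanish-language content is flagged twice as often as benign English-language content (2 of 3 versus 1 of 3), which is the kind of gap a moderation team would want to investigate.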

Market Analysis and Trends

The GameFi market is expanding rapidly, driven by the integration of blockchain technology into gaming. The global GameFi market was estimated at USD 19.58 billion in 2024 and is projected to reach USD 119.62 billion by 2031. This growth brings an influx of user-generated content that requires effective moderation strategies.

Current Statistics

- Market Size (2024): USD 19.58 billion

- Projected Market Size (2031): USD 119.62 billion

- CAGR (2024-2031): 29.5%

- Average Duration of GameFi Projects: Approximately 4 months

- Failure Rate: 93% of projects fail shortly after launch
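The market figures listed above can be checked against each other with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The short sketch below uses only the numbers from the list; it checks their internal consistency, not the underlying market estimates themselves.

```python
# Figures from the statistics listed above (USD billions).
size_2024 = 19.58
size_2031 = 119.62
years = 2031 - 2024

# Compound annual growth rate implied by the two endpoints.
implied_cagr = (size_2031 / size_2024) ** (1 / years) - 1

# Forward projection from the 2024 size at the stated 29.5% CAGR.
projected_2031 = size_2024 * (1 + 0.295) ** years

print(f"Implied CAGR: {implied_cagr:.1%}")
print(f"Projected 2031 size: USD {projected_2031:.1f} billion")
```

Running it prints an implied CAGR of 29.5% and a projected 2031 size of about USD 119.6 billion, so the 2024 figure, the 2031 figure, and the stated growth rate agree within rounding.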

This rapid growth presents significant challenges for content moderation as platforms must cope with an increasing volume of reports related to disruptive behavior and harmful content.
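A common way to cope with a growing report volume is to merge duplicate reports on the same item and order the review queue by severity, so the most harmful content reaches moderators first. The sketch below assumes a hypothetical set of report categories with severity weights (`SEVERITY`) and a simple scoring rule (worst reported category plus one point per report); a real platform would set both from its own policies.

```python
import heapq

# Hypothetical severity weights per report category.
SEVERITY = {"threat": 100, "scam": 80, "harassment": 60, "spam": 10}


def build_queue(reports):
    """Merge duplicate reports per content item and build a priority heap.

    `reports` is an iterable of (content_id, category) pairs.
    """
    grouped = {}
    for content_id, category in reports:
        grouped.setdefault(content_id, []).append(category)

    heap = []
    for content_id, categories in grouped.items():
        # The worst reported category dominates, plus one point per report.
        score = max(SEVERITY.get(c, 0) for c in categories) + len(categories)
        # heapq is a min-heap, so store the negated score to pop the highest first.
        heapq.heappush(heap, (-score, content_id))
    return heap


def next_for_review(heap):
    """Pop the most urgent item, returning (content_id, score)."""
    neg_score, content_id = heapq.heappop(heap)
    return content_id, -neg_score


reports = [
    ("post-1", "spam"), ("post-2", "harassment"), ("post-1", "spam"),
    ("post-3", "scam"), ("post-2", "harassment"), ("post-2", "threat"),
]
queue = build_queue(reports)
while queue:
    print(next_for_review(queue))
```

With these sample reports, the item carrying a threat report is reviewed first, ahead of the scam report and the duplicated spam reports.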

Implementation Strategies

To address these challenges effectively, GameFi platforms should consider implementing the following strategies:

- Automated Moderation Tools: Algorithms and machine learning models can screen content at scale, filtering out clearly inappropriate material automatically and reducing the load on human moderators.

- Human Oversight: While automation can handle large volumes of data, human moderators are essential for nuanced decision-making that considers context.

- Clear Community Guidelines: Establishing transparent rules regarding acceptable behavior can help users understand expectations and reduce instances of misconduct.

- Diversity in Moderation Teams: Employing moderators from various cultural backgrounds can enhance understanding and sensitivity towards different norms and values.
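The combination of automated tools and human oversight described above is often implemented as confidence-based routing: a classifier estimates how likely an item is to violate policy, items above a high threshold are removed automatically, items below a low threshold are allowed, and everything in between goes to a human. The sketch below assumes such a score already exists; the thresholds and the language-keyed reviewer pools (`REVIEWER_POOLS`) are hypothetical placeholders, the latter illustrating how borderline items could be matched to moderators with the relevant cultural background.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


# Hypothetical thresholds; in practice they would be tuned on labelled data.
REMOVE_THRESHOLD = 0.95
ALLOW_THRESHOLD = 0.20

# Hypothetical reviewer pools keyed by language code.
REVIEWER_POOLS = {"en": "english-reviewers", "pt": "portuguese-reviewers", "ko": "korean-reviewers"}


def route(score: float) -> Action:
    """Map a classifier's estimated probability of a policy violation to an action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    if score >= REMOVE_THRESHOLD:
        return Action.REMOVE
    if score <= ALLOW_THRESHOLD:
        return Action.ALLOW
    return Action.HUMAN_REVIEW


def review_pool(language: str) -> str:
    """Send borderline items to reviewers who speak the content's language."""
    return REVIEWER_POOLS.get(language, "general-reviewers")


for score, language in [(0.99, "en"), (0.60, "ko"), (0.05, "pt"), (0.40, "fr")]:
    action = route(score)
    pool = review_pool(language) if action is Action.HUMAN_REVIEW else "-"
    print(score, language, action.value, pool)
```

Widening the gap between the two thresholds sends more items to human moderators, trading review cost for fewer automated mistakes.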

Risk Considerations

The risks associated with ineffective content moderation in GameFi include:

- Reputation Damage: Failure to manage harmful content can lead to negative publicity and loss of user trust.

- Legal Liabilities: Non-compliance with emerging regulations may result in legal repercussions for platforms.

- User Safety: Inadequate moderation may expose users to harassment or toxic behavior, detracting from their gaming experience.

Regulatory Aspects

Recent regulations such as the DSA require platforms to enhance their moderation practices significantly:

- Transparency Requirements: Platforms must provide clear reasons for moderation actions taken against users.

- User Reporting Mechanisms: Effective systems must be established for users to report inappropriate content easily.

- Handling Complaints: A robust process for addressing user complaints about moderation decisions is now mandatory.

These regulations not only increase operational complexity but also require platforms to invest in training and resources for their moderation teams.
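The transparency and complaint-handling requirements above can be supported by recording every moderation decision in a structured form that can be shown to the affected user and checked when they appeal. The sketch below is loosely modeled on the kind of information the DSA expects in a statement of reasons (the restriction imposed, the facts relied on, whether automated means were used, the legal or contractual ground, and redress options). The class, its field names, and the 183-day approximation of the regulation's minimum six-month complaint window are assumptions, and the sketch does not capture every requirement of the regulation.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: the DSA's minimum six-month complaint window, approximated as 183 days.
COMPLAINT_WINDOW = timedelta(days=183)


@dataclass
class StatementOfReasons:
    """Hypothetical record of a moderation decision sent to the affected user."""
    content_id: str
    restriction: str            # e.g. "removal", "visibility_restriction", "account_suspension"
    facts: str                  # facts and circumstances relied on
    triggered_by_notice: bool   # whether a user report prompted the decision
    automated_detection: bool   # whether automated means were used
    ground: str                 # legal or terms-of-service ground cited
    decided_on: date
    redress: str = "You can appeal this decision through the in-app complaint form."

    def complaint_deadline(self) -> date:
        return self.decided_on + COMPLAINT_WINDOW


def accept_complaint(statement: StatementOfReasons, filed_on: date) -> bool:
    """Accept an appeal only if it is filed within the complaint window."""
    return statement.decided_on <= filed_on <= statement.complaint_deadline()


sor = StatementOfReasons(
    content_id="item-42",
    restriction="removal",
    facts="Marketplace listing promoted a fake token presale.",
    triggered_by_notice=True,
    automated_detection=True,
    ground="Terms of Service: fraud and scams",
    decided_on=date(2024, 3, 1),
)
print(sor.complaint_deadline())
print(accept_complaint(sor, date(2024, 9, 15)))
```

Keeping the decision and its reasons in one record means the same data can drive the notice sent to the user and the later check on whether an appeal is still admissible.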

Future Outlook

As the GameFi sector continues to grow, so too will the challenges associated with content moderation. Future trends may include:

- Enhanced AI Capabilities: Continued advancements in AI could improve automated moderation effectiveness while reducing biases.

- Growing User Base: As more players enter the space, platforms will need scalable solutions that can adapt quickly to changing user behaviors.

- Focus on Mental Health: Recognizing the emotional toll on moderators will lead to better support systems and training programs aimed at reducing burnout.

The future landscape will require a balanced approach that prioritizes both user safety and freedom of expression while navigating regulatory complexities.

Frequently Asked Questions About Content Moderation in GameFi

- What are the main challenges faced in moderating GameFi content?

  The primary challenges include managing high volumes of user-generated content, understanding diverse cultural norms, ensuring algorithmic fairness, and complying with evolving regulations.

- How do platforms handle the overwhelming volume of reports?

  Platforms typically use a combination of automated tools for initial filtering followed by human review for nuanced cases.

- What role does AI play in content moderation?

  AI assists in identifying potentially harmful content quickly but requires human oversight to ensure context is considered accurately.

- How can platforms ensure compliance with new regulations?

  By implementing transparent reporting mechanisms, providing clear community guidelines, and enhancing documentation processes for moderation actions.

- What impact does cultural sensitivity have on moderation?

  Cultural sensitivity is crucial as different regions have varying standards for acceptable content; understanding these differences helps maintain a positive gaming environment.

- How do moderators cope with exposure to toxic content?

  Platforms are increasingly recognizing the need for mental health support for moderators through training programs and counseling services.

- What future trends might affect GameFi content moderation?

  Future trends include advancements in AI technology, increased regulatory scrutiny, and a growing emphasis on user safety and mental health support for moderators.

- Why is transparency important in content moderation?

  Transparency builds trust between users and platforms by clarifying how decisions are made regarding content removal or restriction.

In conclusion, while the GameFi sector presents exciting opportunities at the intersection of gaming and finance, it also poses significant challenges related to content moderation. By adopting comprehensive strategies that incorporate advanced technologies alongside human oversight, platforms can create safer environments that foster positive user experiences while navigating regulatory landscapes effectively.